What's New in Apache Airflow 3

Apache Airflow 3 was officially released in April 2025, marking one of the most significant updates in Airflow's history. This release introduces numerous new features and improvements aimed at enhancing developer experience and system performance. Let's dive into these exciting updates.

The New User Interface

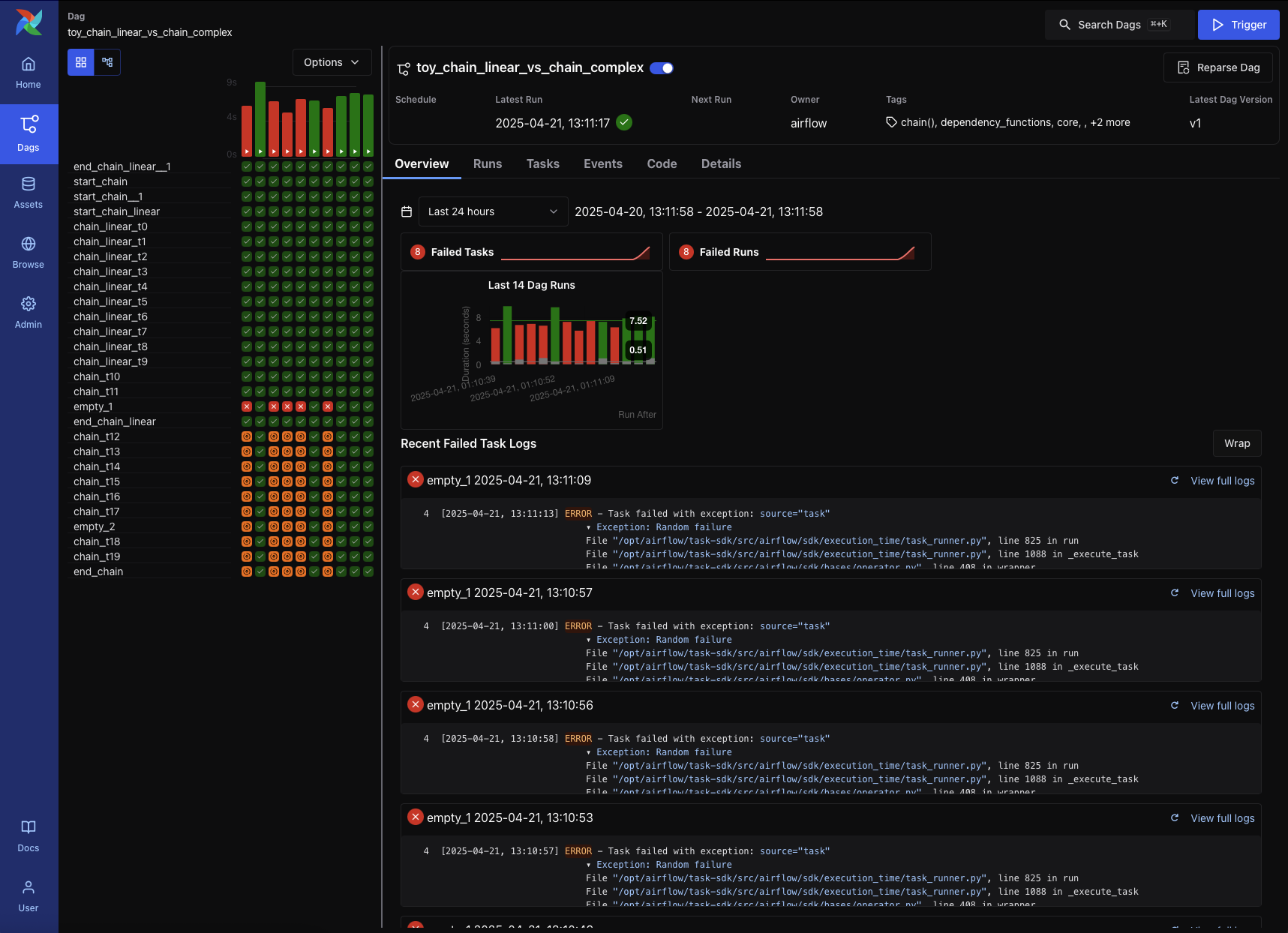

One of the most striking changes in Airflow 3 is the completely revamped user interface. Now built with React, the UI boasts a modern design, including native support for dark mode and seamless integration with Airflow 3's new asset concept. These updates not only improve usability but also provide a more visually appealing experience.

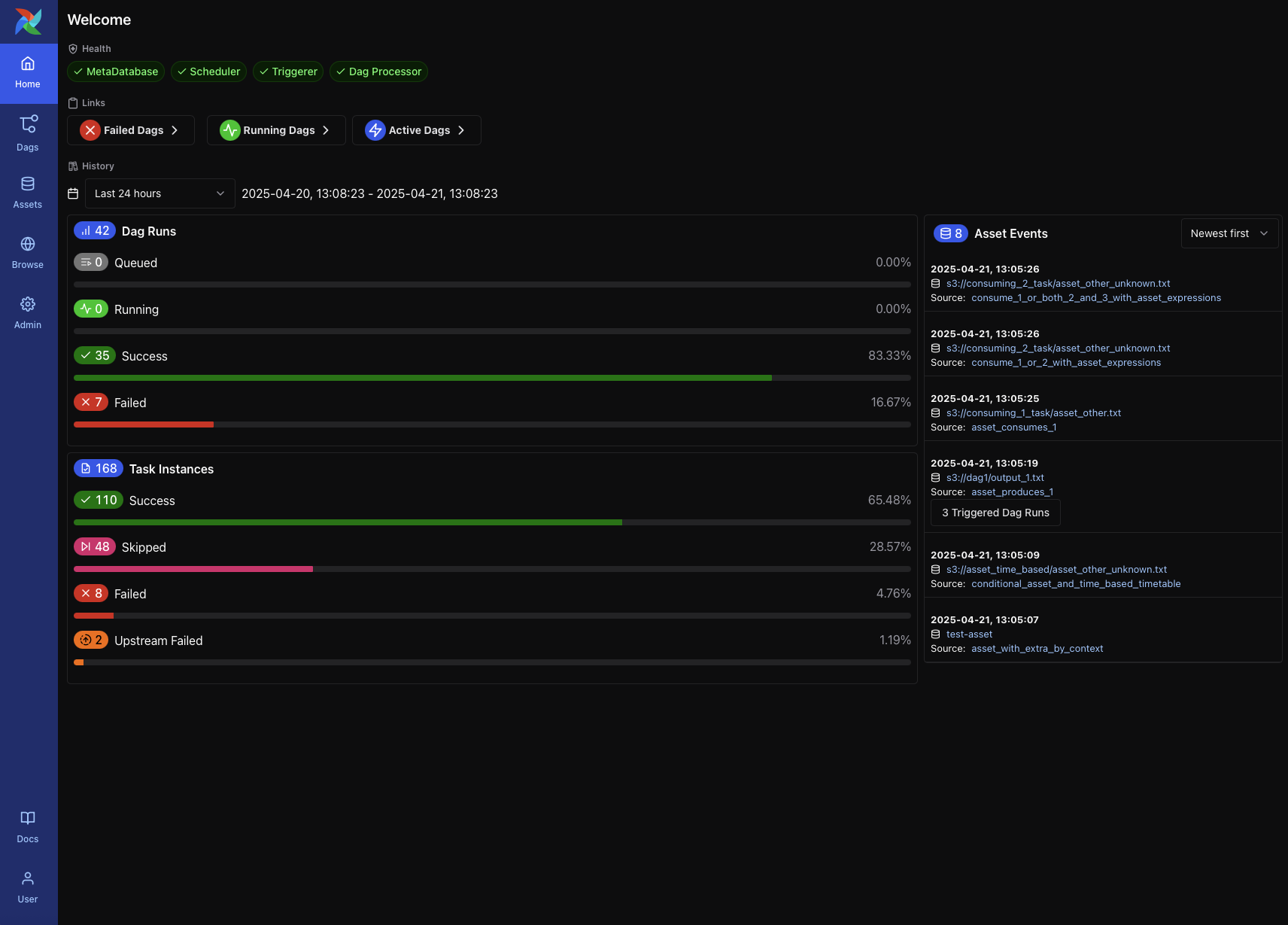

To begin with, Airflow 3 introduces a brand-new Home Page, which replaces the DAG List View as the default landing page. The Home Page now features health indicators that allow users to monitor the status of Airflow system components at a glance. Additionally, it provides quick access to Failed, Running, and Active DAGs, as well as execution statistics for DAGs and tasks over specific time intervals.

Home Page in Airflow 3

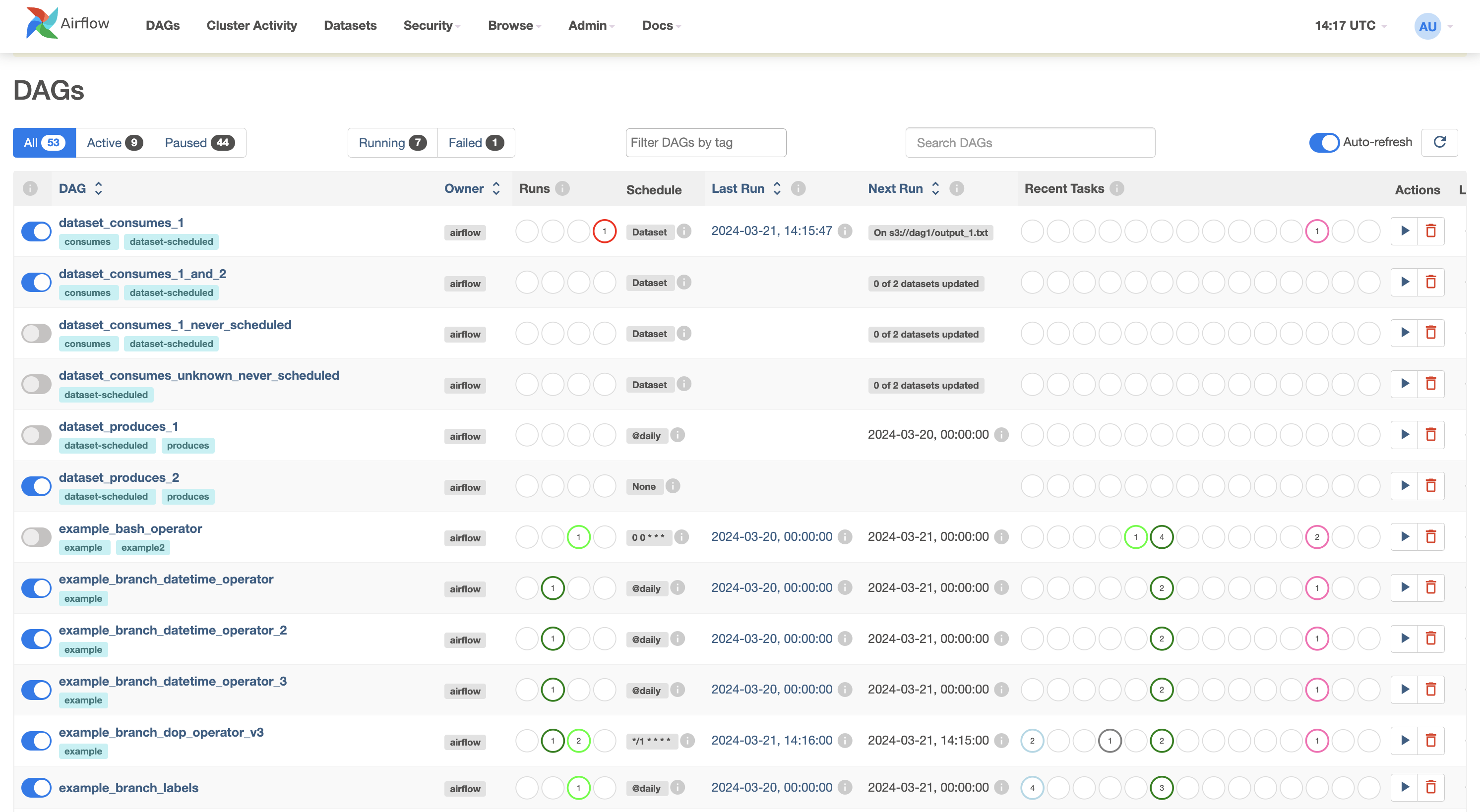

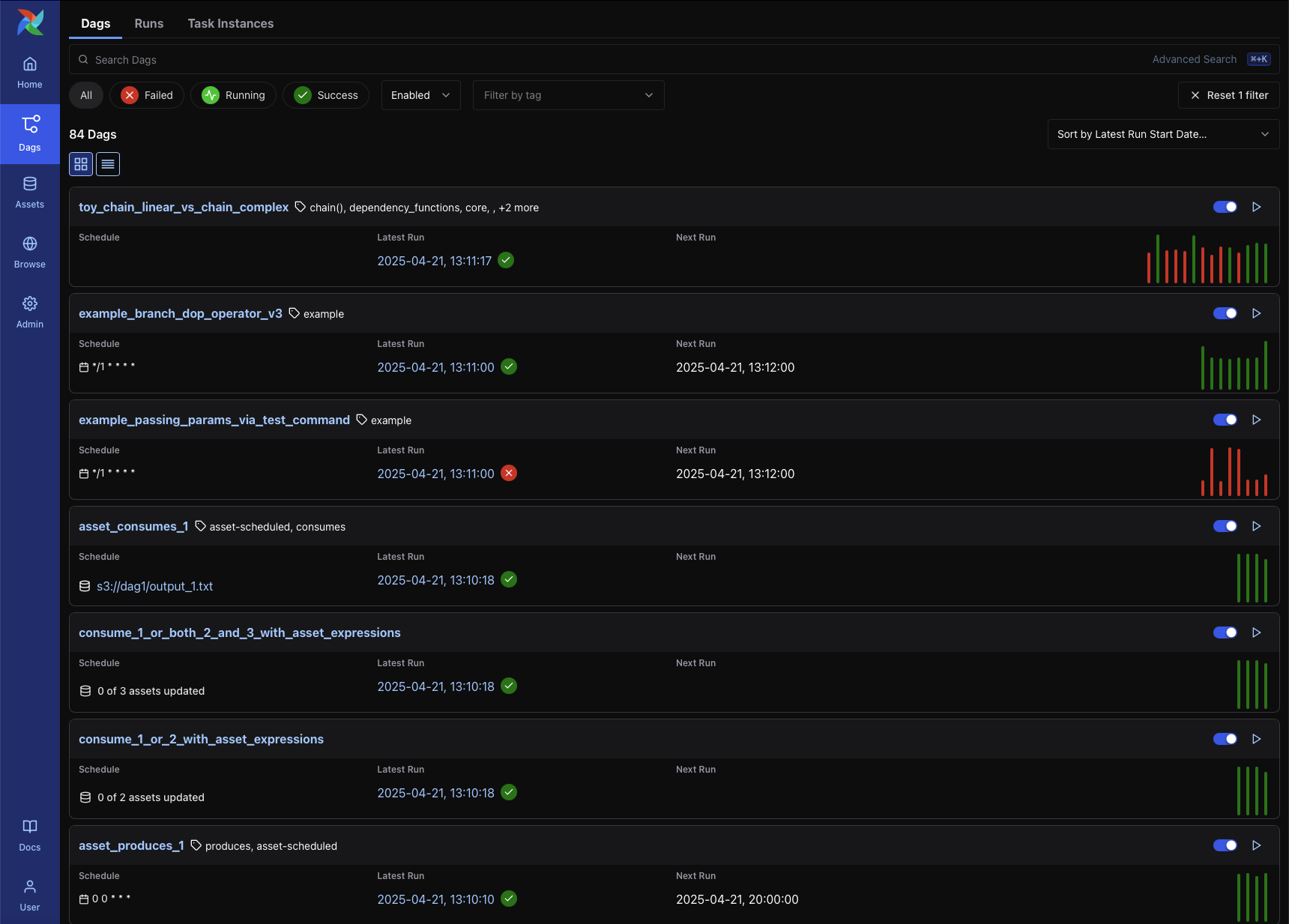

Moving on to the DAG List View, Airflow 3 replaces the circular indicators from Airflow 2 with visual bar charts that summarize recent run outcomes. These charts use color to represent success or failure and bar length to indicate execution time, making it easier for users to quickly understand the status of recent runs.

DAG List View in Airflow 2

DAG List View in Airflow 3

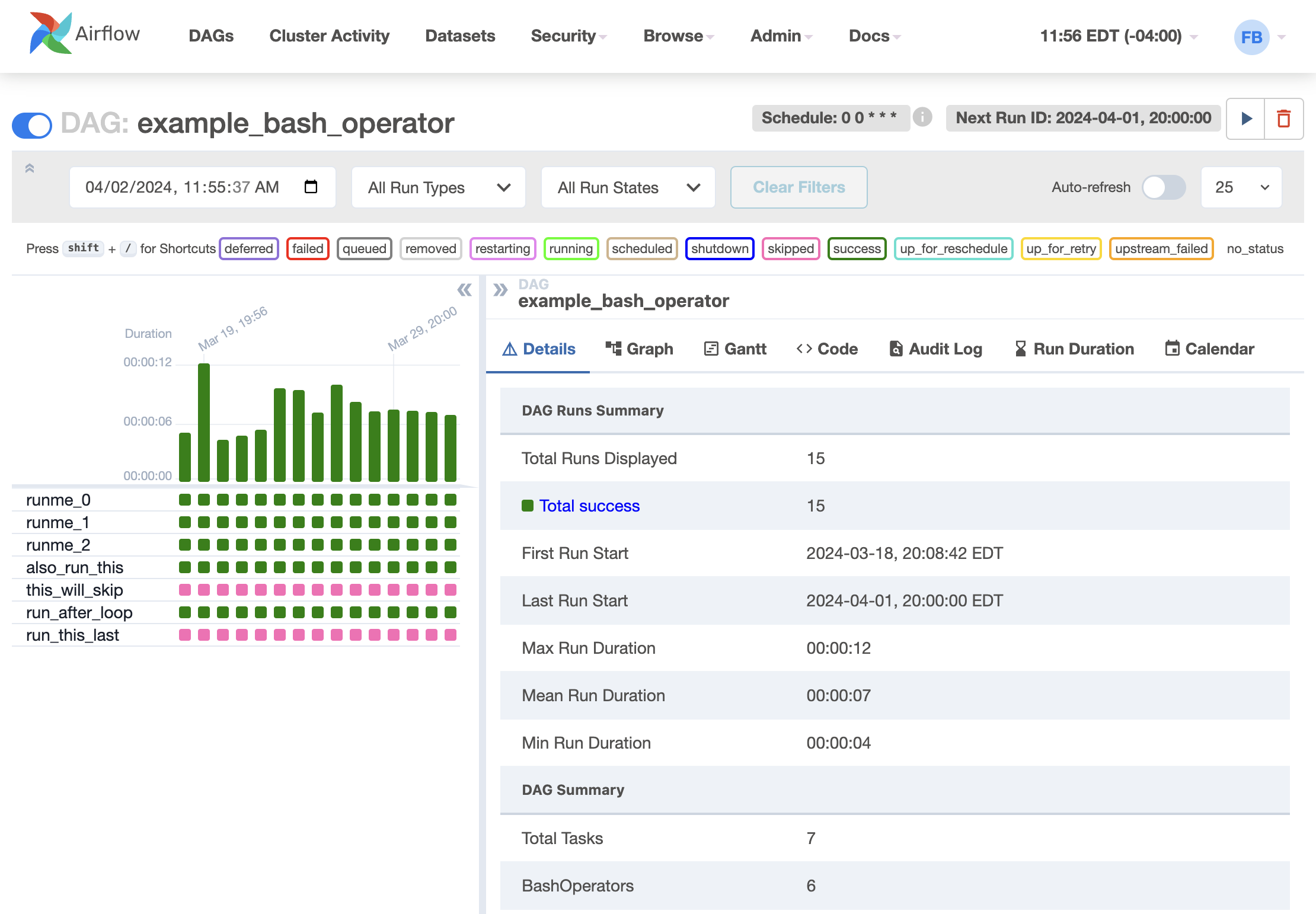

The DAG Details Page has also undergone significant improvements. In Airflow 2, the page was cluttered with excessive colors and text, and the Grid View and Graph View were displayed in separate sections, making it difficult to view detailed information alongside the graph.

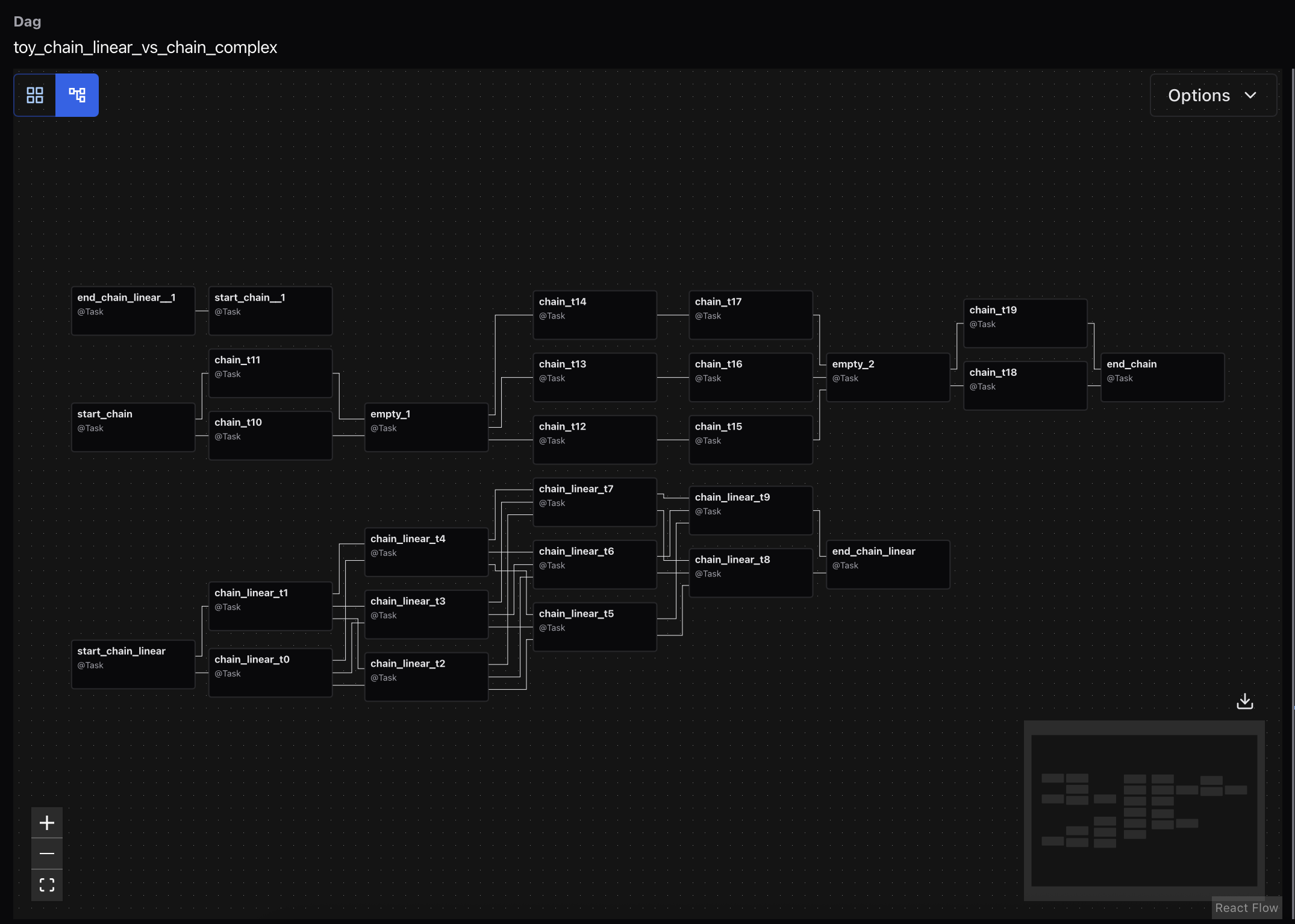

Airflow 3 addresses these issues by integrating the Grid View and Graph View into a single left-side panel. This allows users to simultaneously view detailed information about DAG runs while exploring the grid or graph. The interface has also been simplified, removing unnecessary elements to help users focus on execution details.

DAG Details Page in Airflow 2

DAG Details Page in Airflow 3

Graph View in DAG Details Page in Airflow 3

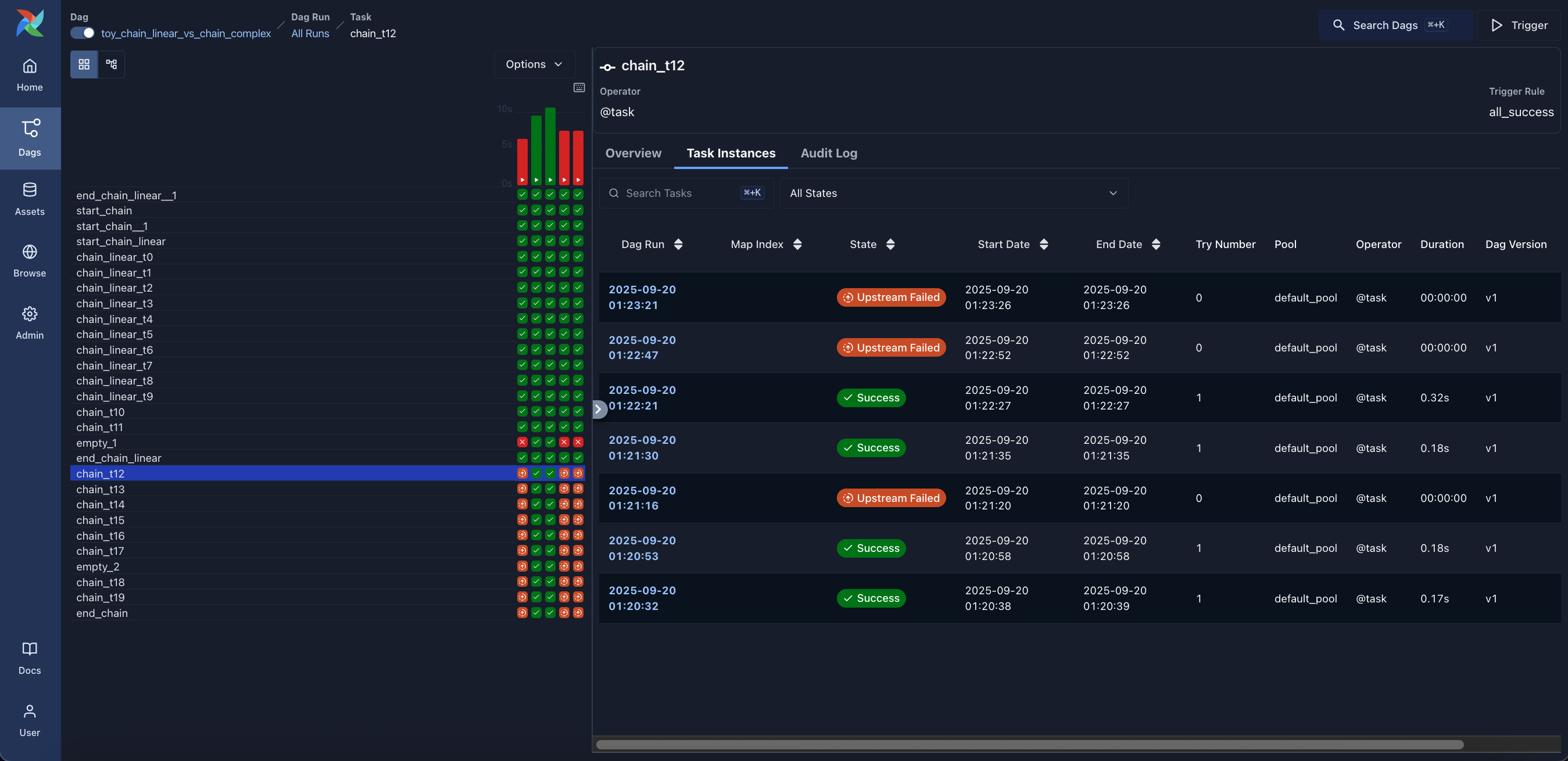

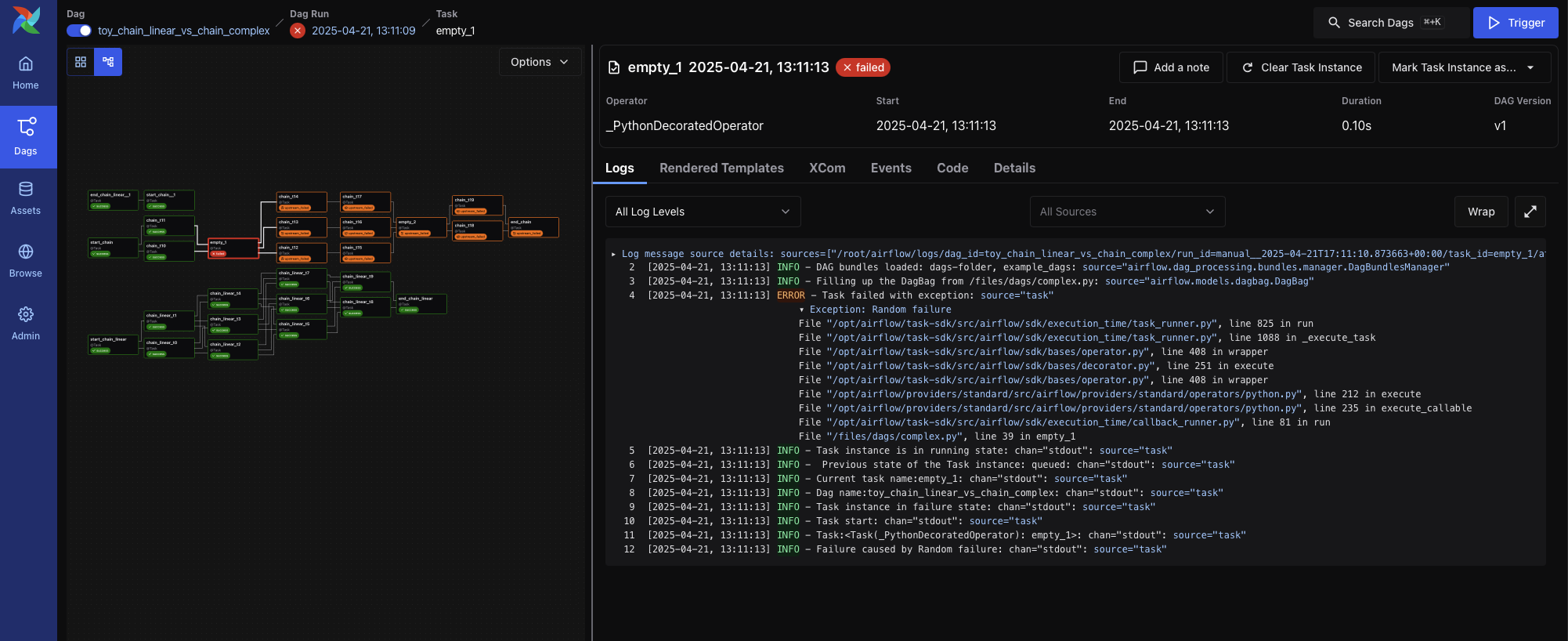

Furthermore, Airflow 3 enhances the DAG Run View by allowing users to inspect task instance details while simultaneously viewing the Grid or Graph View. Users can even switch between these views to better understand task execution statuses in a graphical format.

DAG Run View in Airflow 3

Task Instance View in Airflow 3

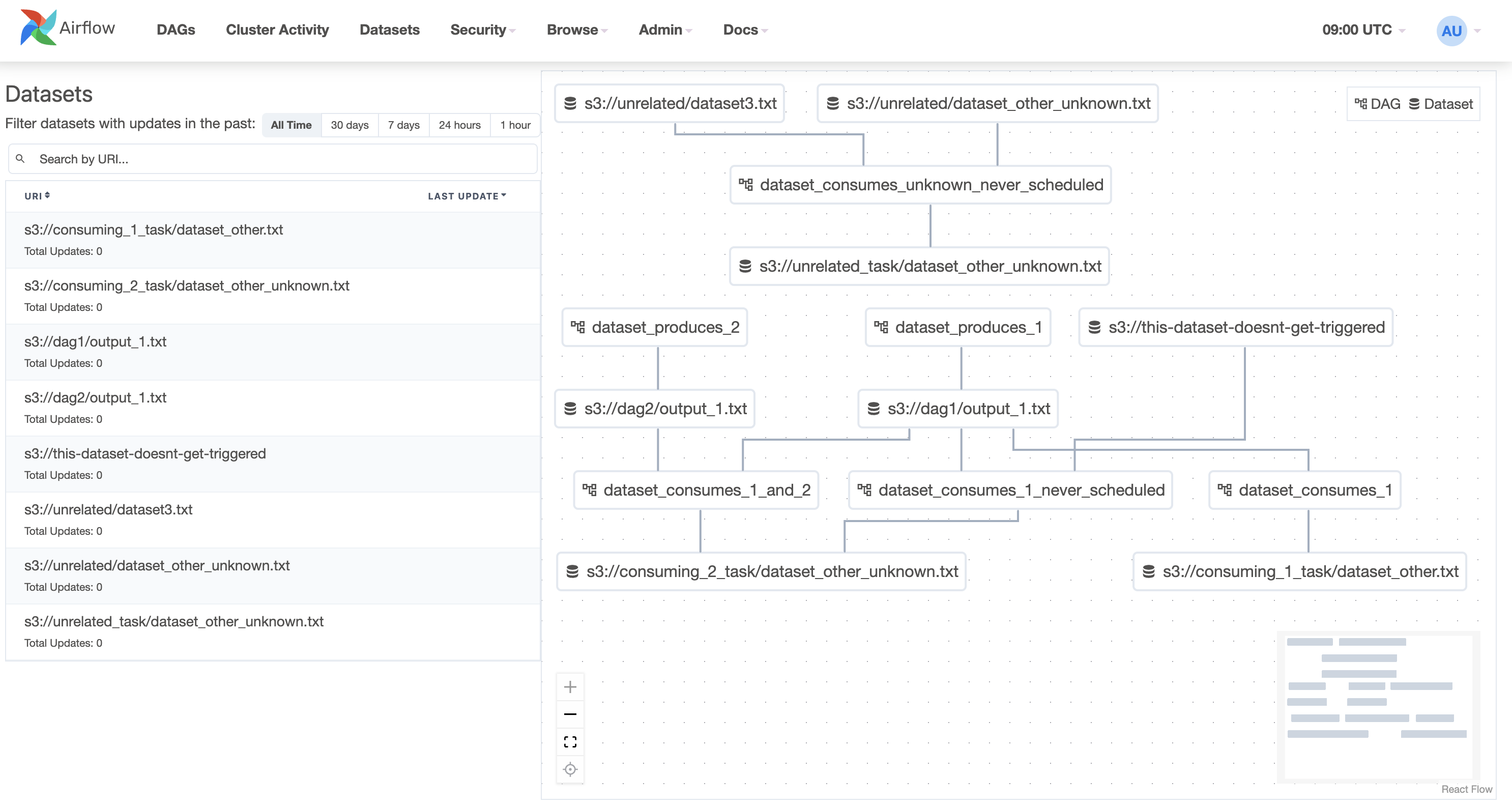

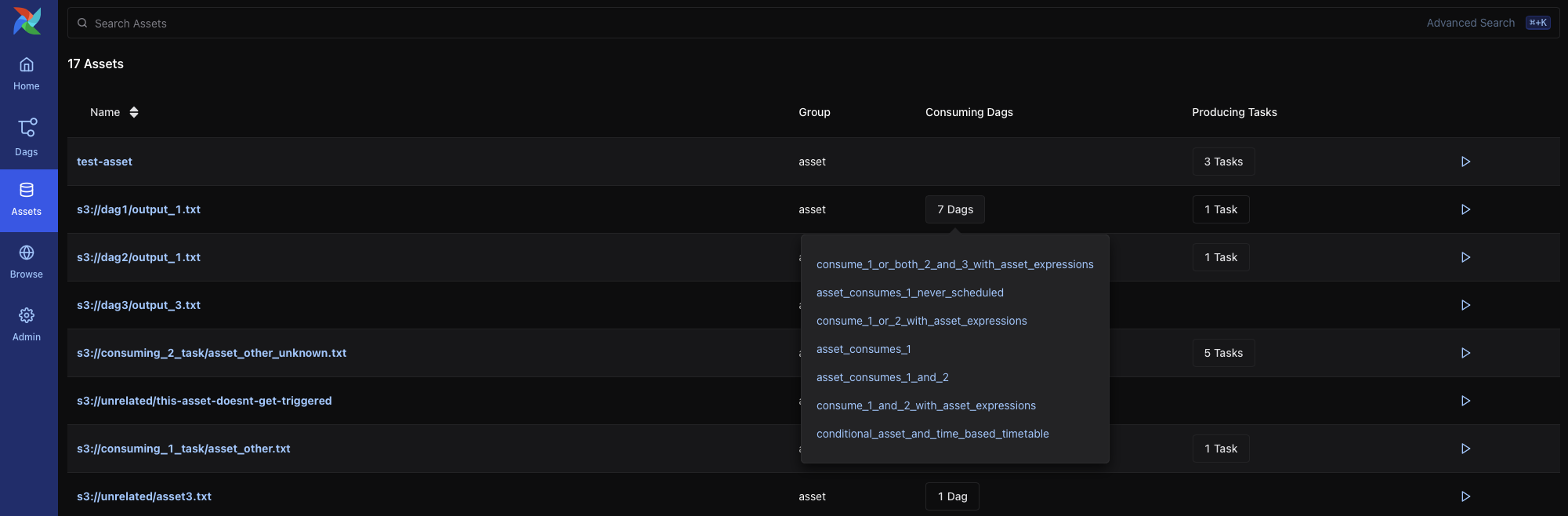

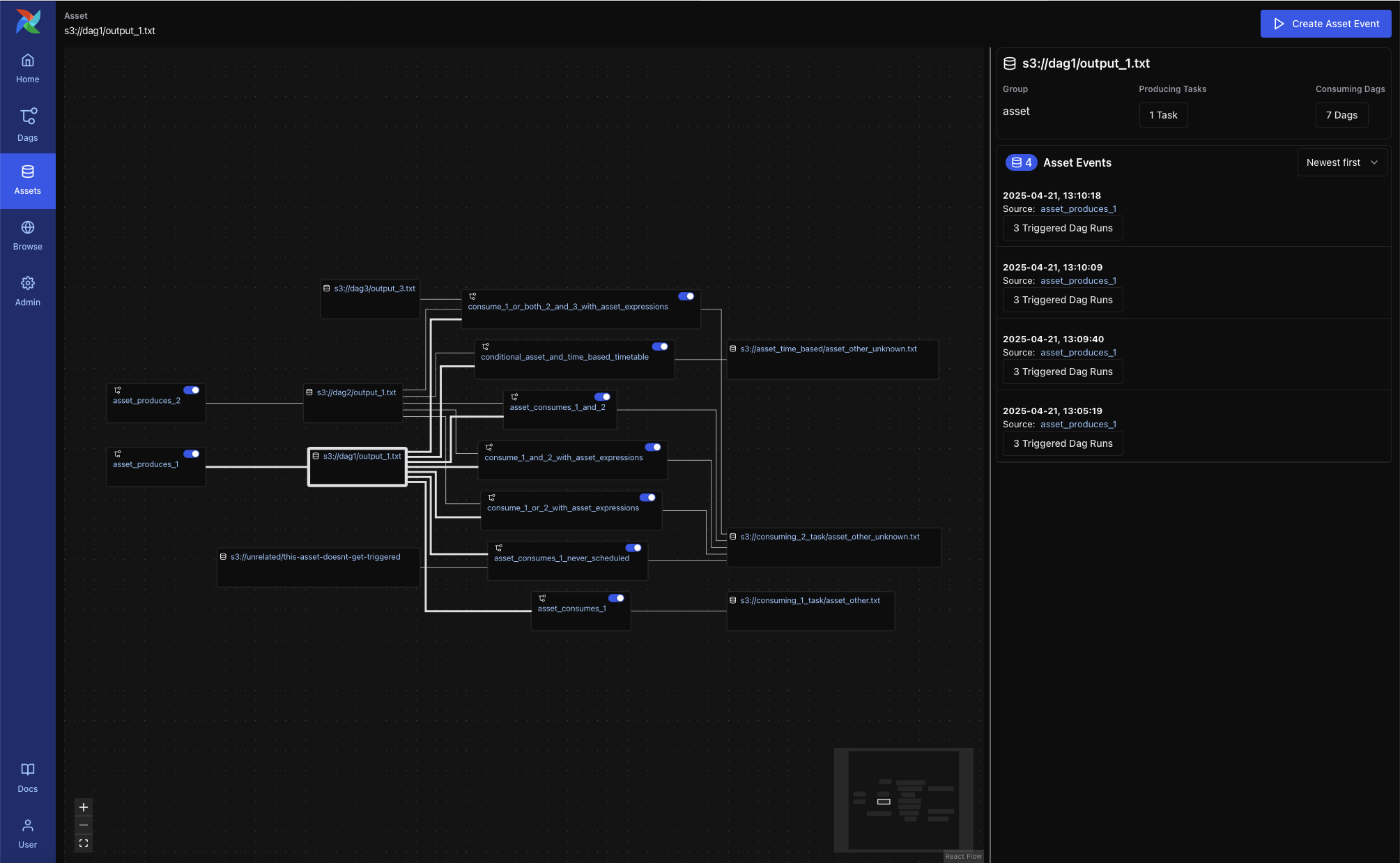

Airflow 2.4 introduced the concept of Datasets, which let users track the flow of data between DAGs and schedule DAGs based on dataset updates. This was a significant step towards understanding data lineage and dependencies. With the release of Airflow 3, the Dataset feature has been upgraded and renamed to Assets, providing a more comprehensive and flexible approach to data and task management.

The new Asset List View offers a global overview of all known assets, organizing them by name and displaying which tasks produce them and which DAGs consume them. This clear organization helps users quickly understand the relationships and dependencies between different assets across the platform.
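
The scheduling rule behind the producer/consumer relationship is simple to state: a DAG scheduled on a list of assets runs once every asset in that list has received at least one update event since the DAG's last run. In real Airflow 3 code you declare this with `Asset` from `airflow.sdk`, list the asset in a producing task's `outlets`, and pass the assets to the consuming DAG's `schedule`. The stdlib-only sketch below does not use Airflow at all; it is a hypothetical toy model (`AssetEventBus` and `ConsumerDag` are invented for illustration) that simulates the all-assets-updated rule so you can see when a consumer run would be triggered.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Asset:
    """Hypothetical stand-in for an asset, identified only by its URI."""
    uri: str


@dataclass
class ConsumerDag:
    """A DAG scheduled on a list of assets; it runs once all of them are updated."""
    dag_id: str
    schedule: list[Asset]
    # Assets updated at least once since this DAG's last triggered run.
    pending: set[Asset] = field(default_factory=set)
    runs: int = 0


class AssetEventBus:
    """Toy registry: producers emit asset events, consumers get runs when ready."""

    def __init__(self) -> None:
        self.consumers: list[ConsumerDag] = []

    def register(self, dag: ConsumerDag) -> None:
        self.consumers.append(dag)

    def emit(self, asset: Asset) -> list[str]:
        """Record one update event for `asset` and return the dag_ids triggered."""
        triggered = []
        for dag in self.consumers:
            if asset not in dag.schedule:
                continue  # this DAG does not depend on the asset
            dag.pending.add(asset)
            if dag.pending == set(dag.schedule):
                dag.runs += 1
                dag.pending.clear()  # wait for a fresh round of updates
                triggered.append(dag.dag_id)
        return triggered


# Hypothetical asset URIs, used only for illustration.
orders = Asset("s3://example-bucket/orders.csv")
customers = Asset("s3://example-bucket/customers.csv")

bus = AssetEventBus()
report = ConsumerDag("daily_report", schedule=[orders, customers])
bus.register(report)

print(bus.emit(orders))     # [] because customers has not been updated yet
print(bus.emit(customers))  # ['daily_report']
```

Clearing `pending` after each triggered run is what makes the consumer wait for a complete new round of updates rather than firing on every single event.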

Datasets List View in Airflow 2

Asset List View in Airflow 3

The Asset Graph View takes this a step further by providing a contextual map of an asset's lineage. Users can explore upstream producers and downstream consumers of an asset, trigger asset events manually, and inspect recent asset events and the DAG runs they initiated. This comprehensive view offers deep insights into data flow and dependencies.

Asset Graph View in Airflow 3

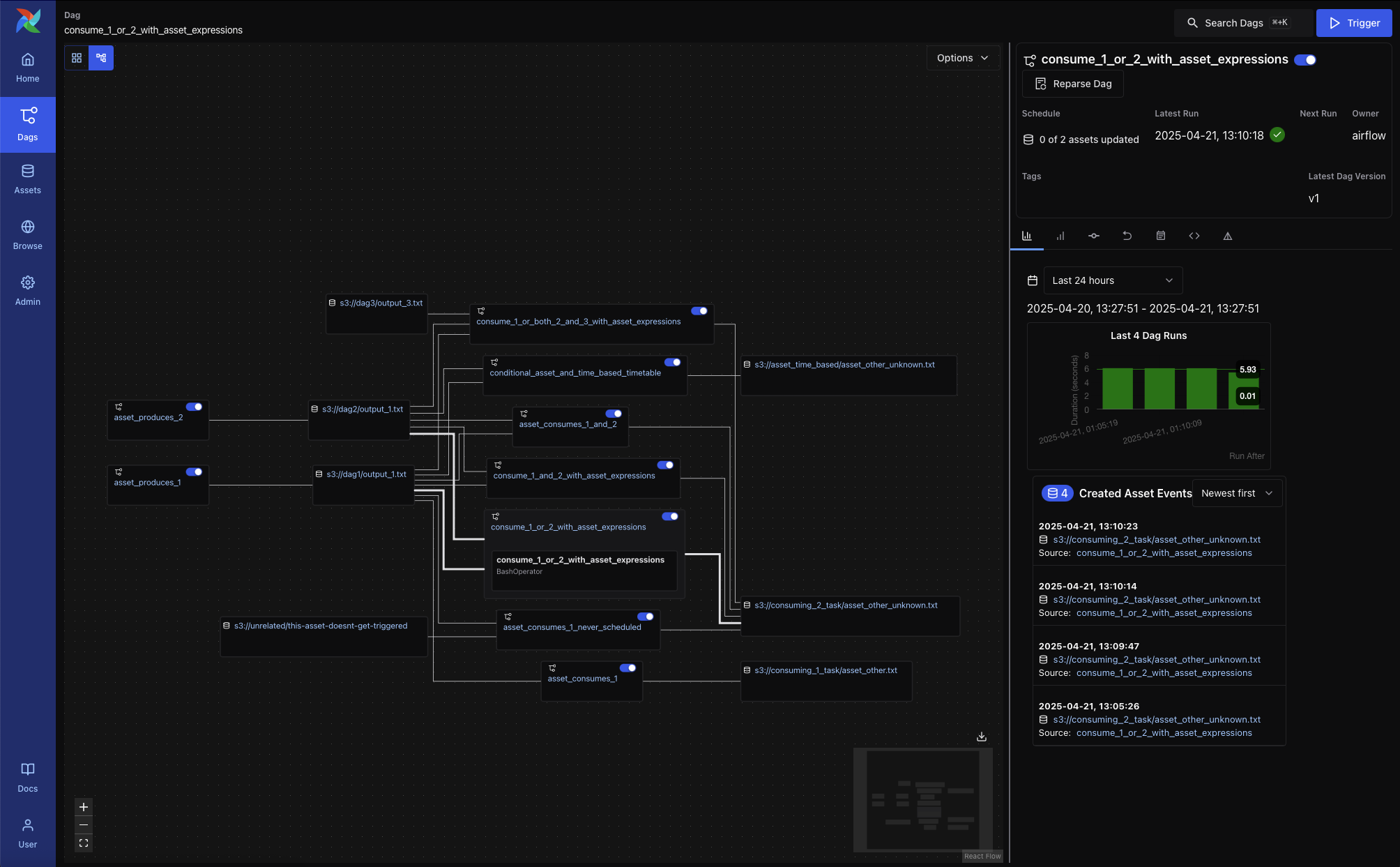

Finally, when working within a DAG, the DAG Graph View with Asset Overlays integrates asset awareness directly into the task dependency graph. This means users can visualize how assets flow between DAGs and uncover asset-triggered dependencies, all within the familiar DAG graph view. This seamless integration completes the picture of how data moves throughout workflows, from task-level execution to broader asset relationships.

Graph Overlays in DAG Graph View in Airflow 3

The reimagined Asset system in Airflow 3 provides users with powerful tools to manage and understand their data workflows better than ever before.

Architecture Changes and Task Isolation

In Airflow 2, every system component, including the Scheduler, Workers, Webserver, and CLI, could directly communicate with the Metadata Database. This design had a clear advantage: it was simple and straightforward. All components coordinated through a single source of truth, ensuring strong consistency across the system. The Scheduler could write task states directly, Workers could report results immediately, and the Webserver could display the most up-to-date DAG and task information. As a result, users only needed to maintain one reliable database to keep the entire system running smoothly.

However, this architecture also introduced several challenges. Since all components relied on the same database, it could quickly become a performance bottleneck as the number of DAGs and tasks grew. Under high concurrency, competition for database locks between Schedulers and Workers could lead to delays or even deadlocks. In addition, task code had the ability to access the database directly, which reduced isolation and increased the risk of bugs or malicious code affecting the entire system. Finally, the tight coupling between the database schema and all components made upgrades more difficult and increased operational costs, while also limiting observability and extensibility.

With Airflow 3, these issues are addressed through the introduction of a dedicated API Server, which also replaces the Airflow 2 Webserver and serves the UI. Workers and task code no longer connect to the Metadata Database directly; instead, they communicate with the API Server through the new Task Execution API, while core components such as the Scheduler still use the database. This reduces the number of direct database connections, lowers lock contention, and improves system scalability. It also strengthens security and isolation, because task code no longer has direct access to the core database. Built on FastAPI, the API Server delivers higher performance and more consistent interfaces, and the Task Execution API lays the groundwork for Task SDKs in languages other than Python. Overall, Airflow 3's API Server resolves the bottlenecks and risks present in the earlier architecture while laying the foundation for a more modern and extensible system.

Source: Astronomer
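
The isolation idea can be illustrated with a small, hypothetical model; it is not Airflow's implementation. In the sketch below only `ExecutionApi` holds a reference to the store. Task code receives the API object plus a token scoped to a single task instance, and the API rejects unknown tokens and illegal state transitions. The class names, the token scheme, and the reduced set of states are assumptions made for illustration; Airflow's real task state machine has many more states.

```python
import secrets


class MetadataStore:
    """Stand-in for the metadata database. Only ExecutionApi holds a reference."""

    def __init__(self) -> None:
        self._states: dict[str, str] = {}

    def get(self, ti_id: str) -> str:
        return self._states[ti_id]

    def set(self, ti_id: str, state: str) -> None:
        self._states[ti_id] = state


# Simplified subset of task-instance transitions (an assumption for this sketch).
ALLOWED_TRANSITIONS = {
    "queued": {"running"},
    "running": {"success", "failed"},
}


class ExecutionApi:
    """Hypothetical gatekeeper: task code may only report on its own task instance."""

    def __init__(self, store: MetadataStore) -> None:
        self._store = store
        self._tokens: dict[str, str] = {}  # token -> task instance id

    def queue_task(self, ti_id: str) -> str:
        """Server side: mark a task instance queued and issue a token scoped to it."""
        self._store.set(ti_id, "queued")
        token = secrets.token_hex(16)
        self._tokens[token] = ti_id
        return token

    def update_state(self, token: str, new_state: str) -> None:
        """Worker side: validate the caller and the transition before writing."""
        ti_id = self._tokens.get(token)
        if ti_id is None:
            raise PermissionError("unknown or revoked token")
        current = self._store.get(ti_id)
        if new_state not in ALLOWED_TRANSITIONS.get(current, set()):
            raise ValueError(f"illegal transition {current!r} -> {new_state!r}")
        self._store.set(ti_id, new_state)


def run_task(api: ExecutionApi, token: str) -> None:
    """Task-side code: it sees only the API and its token, never the store."""
    api.update_state(token, "running")
    # ... user task logic would run here ...
    api.update_state(token, "success")


store = MetadataStore()
api = ExecutionApi(store)
token = api.queue_task("example_dag.extract.run1")
run_task(api, token)
print(store.get("example_dag.extract.run1"))  # success
```

Routing every write through one narrow interface is what lets the server validate each change, instead of trusting arbitrary task code with a database session.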

Remote Execution

One of the most important innovations in Airflow 3.0 is the introduction of a Task Execution API and an accompanying Task SDK.

These components enable tasks to be defined and executed independently of Airflow’s core runtime engine.

Environment decoupling: Tasks can now run in isolated, remote, or containerized environments, separate from the scheduler and workers.

Why use the Edge Worker? The Edge Worker is an execution option that lets you run Airflow tasks on edge devices, and it is designed to be lightweight and easy to deploy.

The Edge Worker is shipped in the apache-airflow-providers-edge3 provider package.
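
As we understand the provider's design, edge workers are pull-based: each worker polls the central Airflow deployment over HTTP(S) for tasks assigned to the queues it serves, so a remote site only needs outbound connectivity. The toy model below simulates that polling and queue matching in plain Python. `JobBoard`, `EdgeWorkerSim`, and the queue names are hypothetical and do not reflect the provider's actual classes or wire protocol.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Job:
    """A task waiting to be picked up; `queue` decides which workers may run it."""
    task_id: str
    queue: str


class JobBoard:
    """Central side (toy): holds jobs until an edge worker asks for one."""

    def __init__(self) -> None:
        self._jobs: list[Job] = []

    def submit(self, job: Job) -> None:
        self._jobs.append(job)

    def fetch(self, queues: set[str]) -> Optional[Job]:
        """Hand out the oldest job on one of the worker's queues, or None."""
        for i, job in enumerate(self._jobs):
            if job.queue in queues:
                return self._jobs.pop(i)
        return None


@dataclass
class EdgeWorkerSim:
    """Worker side (toy): only makes outbound requests, never accepts inbound ones."""
    name: str
    queues: set[str]
    board: JobBoard
    done: list[str] = field(default_factory=list)

    def poll_once(self) -> bool:
        """Ask the board for work; return False when nothing is available."""
        job = self.board.fetch(self.queues)
        if job is None:
            return False
        # A real worker would execute the task here and report its state back.
        self.done.append(job.task_id)
        return True


board = JobBoard()
board.submit(Job("load_sensor_data", queue="factory_a"))
board.submit(Job("aggregate", queue="default"))
board.submit(Job("calibrate", queue="factory_a"))

edge = EdgeWorkerSim("edge-factory-a", queues={"factory_a"}, board=board)
while edge.poll_once():
    pass
print(edge.done)  # ['load_sensor_data', 'calibrate']
```

The `aggregate` job stays on the board because no worker serving the `default` queue has polled yet; a pull model like this keeps the central deployment from ever needing to open a connection into the remote site.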