The Lakehouse Series: From Data Lakes to Data Lakehouses¶

TLDR

After reading this article, you will learn:

- What limitations traditional data lakes face

- How data lakehouses merge the flexibility of data lakes with the structured management of data warehouses

- What enterprise-grade capabilities define lakehouse architecture

- What the major open-source lakehouse formats are

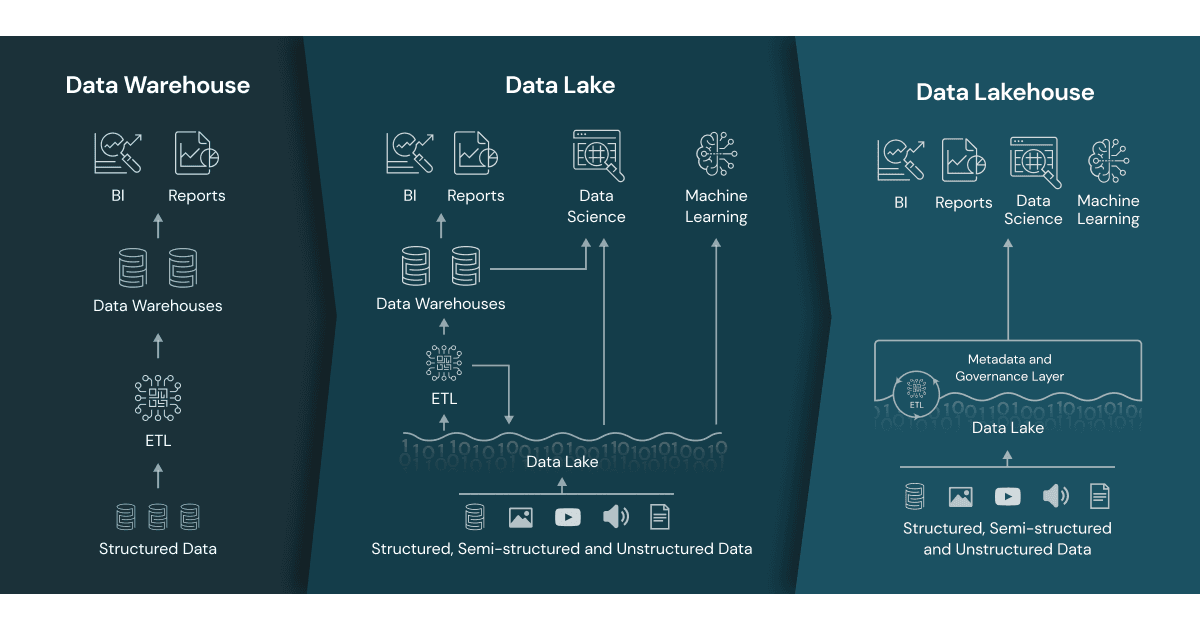

Data Architecture Evolution

Data Architecture Evolution

Challenges in Traditional Data Lakes¶

In the 2010s, Data Lakes emerged as a revolutionary concept, enabling enterprises to store vast amounts of raw data for on-demand analysis. This "store everything in a pool and let users extract what they need" approach fundamentally differed from the traditional data warehouse paradigm of "ETL first, then store." While many companies implemented Data Lakes using Hadoop/HDFS, they still couldn't resolve critical issues around data governance, transactional support, and query performance. Over time, the limitations of Data Lakes became increasingly apparent, particularly in scenarios requiring efficient querying and robust data governance. Traditional data lakes often suffer from issues such as:

- Absence of Transactional Support: Data lakes cannot guarantee atomicity, consistency, isolation, and durability (ACID) properties, making it challenging to handle concurrent operations reliably.

- Poor Data Quality Enforcement: Without built-in validation mechanisms, data lakes struggle to maintain data integrity and prevent the ingestion of corrupt or invalid data.

- Consistency and Isolation Issues: The inability to properly isolate read and write operations creates conflicts when mixing batch processing, streaming workloads, and real-time queries.

- Limited Interoperability: Data lakes are frequently bound to particular processing engines, restricting the choice of analytical tools and creating vendor lock-in situations. For instance, traditional data lake implementations (such as Hive Metastore with HDFS) were primarily designed for Spark or Hive ecosystems.

These pitfalls highlight the need for a more robust solution to manage and query data effectively.

The Emergence of Data Lakehouses¶

Data lakehouses represent the natural evolution from traditional data lakes, addressing their inherent limitations while preserving their core benefits. In 2020, Databricks introduced the lakehouse concept as a hybrid architecture that merges the flexible storage capabilities of data lakes with the structured management and transactional guarantees of data warehouses. This convergence enables organizations to maintain their existing data lake investments while gaining enterprise-grade features previously exclusive to warehouse environments.

The lakehouse architecture is characterized by several key capabilities:

- ACID Transactional Capabilities: Enterprise lakehouses require robust support for concurrent read and write operations across multiple data pipelines without compromising data integrity.

- Data Structure Validation and Evolution: The platform must enforce data schemas while allowing flexible evolution, accommodating traditional warehouse patterns like star and snowflake designs.

- Direct Business Intelligence Integration: Lakehouses allow analytical tools to query source data directly without requiring intermediate data movement or transformation.

- Independent Scaling of Storage and Processing: By separating storage from computational resources, the architecture can accommodate unlimited concurrent users and handle massive datasets efficiently.

- Standards-Based Accessibility: Built on open formats like Parquet with standardized APIs, enabling seamless integration with diverse analytical tools, ML frameworks, and programming environments.

- Multi-Modal Data Handling: Accommodates everything from raw, unstructured content to highly organized structured datasets within a unified platform.

- Versatile Processing Capabilities: Supports varied analytical needs including data science workflows, machine learning model development, SQL analytics, and business reporting.

- Native Real-Time Data Processing: Enables continuous data ingestion and immediate availability for live dashboards and real-time enterprise reporting requirements.

Since Databricks introduced the Data Lakehouse concept in 2020, cloud providers have rapidly adopted and implemented these capabilities across their platforms. This widespread adoption reflects the growing recognition of lakehouses as the next evolution in data architecture. The momentum behind this shift is evidenced by Forrester Wave's 2024 report, which evaluated cloud vendors' Data Lakehouse offerings across 24 comprehensive dimensions. Based on assessments of both current capabilities and strategic vision, three companies emerged as Leaders: Databricks, Google, and Snowflake. The report reinforces that Data Lakehouse represents an inevitable evolution in the data landscape.

In the open source realm, projects like Apache Hudi, Apache Iceberg, and Delta Lake continue to evolve, providing their respective solutions to implement Data Lakehouse functionality. These projects are constantly enhancing their capabilities. Even in 2025, DuckDB launched DuckLake, attempting to define a new Data Lakehouse standard that uses databases for catalog and metadata management, rather than the blob storage management approach employed by the other three formats.

Data Lakehouse Open Formats¶

Table formats like Apache Hudi, Apache Iceberg, and Delta Lake address these challenges by introducing:

- ACID Transactions: Ensuring data consistency and reliability during writes and updates.

- Schema Evolution Support: Allowing seamless changes to data schemas without breaking downstream processes.

- Efficient Metadata Management: Leveraging manifest or log files to optimize query planning and execution.

- Multi-Engine Compatibility: Enabling interoperability across various processing engines like Spark, Flink, and Trino.

These lakehouse formats bridge the gap between raw data storage and structured data management, enabling organizations to build scalable, high-performance, and reliable lakehouse architectures.

While each format follows its own design philosophy and implementation approach, they all share a common goal: overcoming the limitations of traditional data lakes and providing a unified foundation for analytics and machine learning workloads.

I really enjoyed Alex Merced’s presentation on "The Who, What and Why of Data Lake Table Formats (Iceberg, Hudi, Delta Lake)". As the co-author of "Apache Iceberg: The Definitive Guide", he offered a great overview of the three formats. You can watch the full talk below:

References¶

- What Is a Lakehouse?

- Tampa Bay DE Meetup - The Who, What and Why of Data Lake Table Formats (Iceberg, Hudi, Delta Lake)

- The Forrester Wave™: Data Lakehouses, Q2 2024

- DuckLake: SQL as a Lakehouse Format